🌍 INCLUDE: Evaluating Multilingual Language Understanding with Regional Knowledge

Nov 2024

I am Angelika Romanou, a PhD student at EPFL (École Polytechnique Fédérale de Lausanne) in Switzerland, working on Artificial Intelligence and Natural Language Processing (NLP).

I am co-advised by Prof. Antoine Bosselut @NLPLab and Prof. Karl Aberer @LSIR.

Currently, I am a Research Scientist Intern at Meta FAIR, where I work on robustness evaluation and post-training of LLMs, developing methods to make them less brittle across prompts and contexts.

My research focuses on building reliable AI systems by closing the loop between evaluation and post-training. I develop evaluation pipelines that target complex reasoning, long-context understanding, and prompt sensitivity, exposing where models fail. These insights drive the design of post-training interventions that directly address the identified weaknesses and improve robustness and transfer across languages, with demonstrated gains at scale.

Before my PhD, I gained almost seven years of industry experience as a data and machine learning engineer in R&D departments. I have also given guest lectures, and I am currently a teaching assistant for CS-552: Modern Natural Language Processing at EPFL.

When not in the lab, I like to play tennis 🎾 or relax by the water with a nice book 📚.

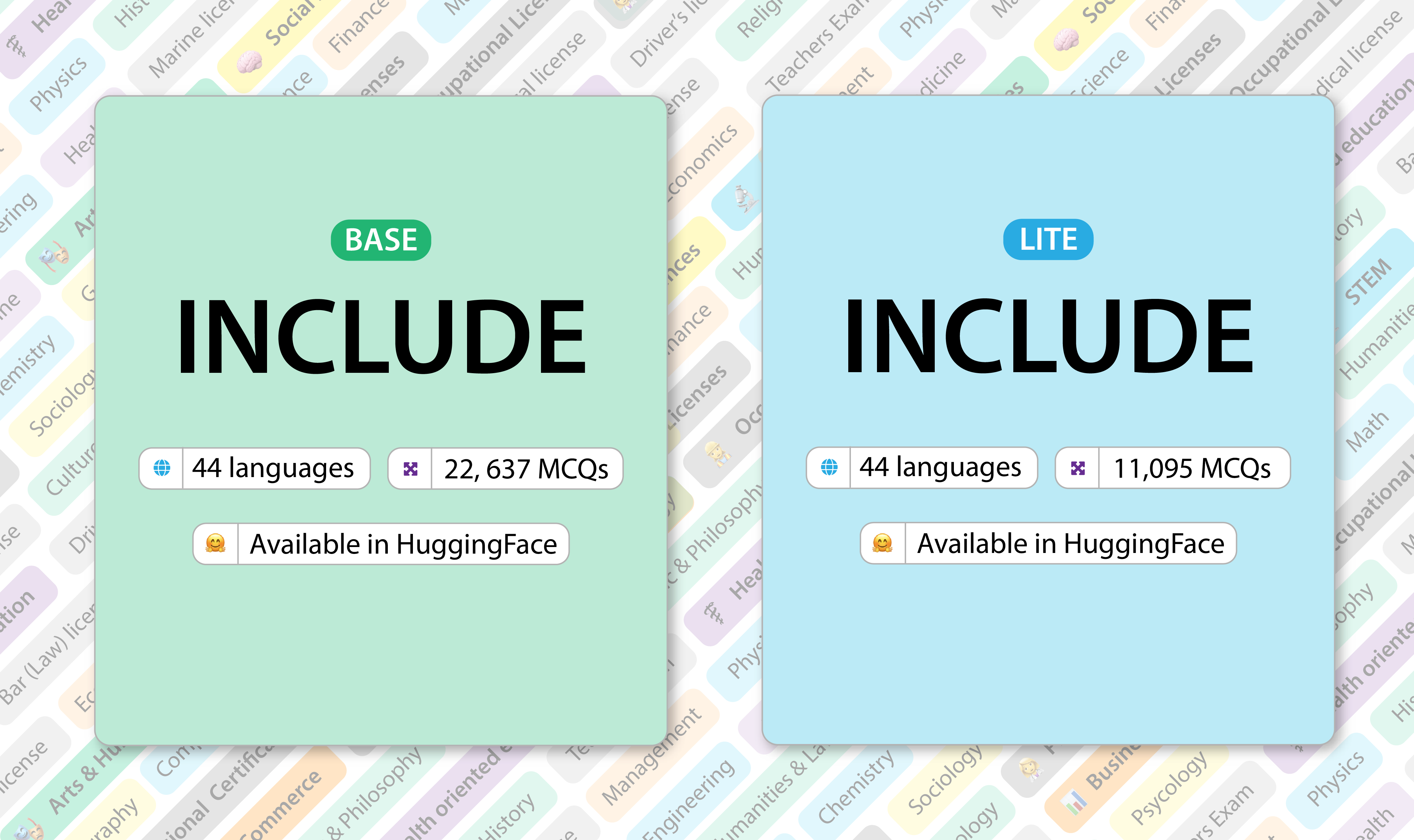

🚨 LATEST BENCHMARK: INCLUDE, a multilingual LLM evaluation benchmark spanning 44 languages.

Download and use INCLUDE here.

Read the paper here.

🚨 LATEST MODEL: Apertus-70B, a suite of fully open-source multilingual LLMs.

Download and use Apertus models here.

Read the paper here.

✨ MEDICAL-ALIGNED LLM: Meditron-70B, a suite of fully open-source medical LLMs.

Download and use Meditron here.

Read the paper here.
